
Vespa AI – Enterprise-Scale AI Search Platform

Vespa AI is an AI search and vector database platform built for large-scale, real-time applications. It combines text, structured data, and vector search with machine-learned ranking to power search, retrieval-augmented generation (RAG), recommendations, and personalization.

Vespa AI Key Features:

- Hybrid search with text, vectors, and structured data

- Integrated machine-learned ranking and real-time inference

- Scales to billions of items and thousands of queries per second

- Built-in tensor support for complex ranking and decisioning

- Streaming search mode for personal/private data at lower cost

- Ideal for RAG, recommendations, and semi-structured navigation

Who Should Use Vespa AI?

Best for enterprises, AI engineers, and developers building advanced search engines, recommendation systems, or generative AI pipelines that need low latency and massive scalability.

What Makes It Unique?

Unlike traditional vector databases, Vespa natively combines vector search with machine-learned ranking, structured filters, and distributed inference. This lets you deploy hybrid search, RAG, and recommendation systems at any scale without sacrificing speed or relevance. Vespa is designed for production-grade, mission-critical AI applications.
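To make the idea of hybrid search concrete, here is a minimal, library-free Python sketch that filters on a structured field and then blends a keyword score with vector similarity. It does not use Vespa's API or query language; the `Doc` type, the `hybrid_search` function, the `alpha` weight, and the scoring formula are all hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    # Hypothetical document shape: free text, an embedding, and one structured field.
    doc_id: str
    text: str
    embedding: list[float]
    category: str


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the text (a crude stand-in for text ranking)."""
    q_terms = set(query.lower().split())
    d_terms = set(text.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def hybrid_search(docs, query, query_embedding, category, alpha=0.5, top_k=3):
    """Apply a structured filter, then rank by a weighted mix of text and vector scores."""
    # Structured filter: only documents in the requested category are candidates.
    candidates = [d for d in docs if d.category == category]
    scored = [
        (
            alpha * keyword_score(query, d.text)
            + (1 - alpha) * cosine(query_embedding, d.embedding),
            d.doc_id,
        )
        for d in candidates
    ]
    # Highest combined score first.
    scored.sort(reverse=True)
    return [(doc_id, round(score, 3)) for score, doc_id in scored[:top_k]]
```

Calling `hybrid_search(docs, "vector search", [1.0, 0.0], category="tech")` returns a list of `(doc_id, score)` pairs ordered by combined score. In a production engine the linear blend would typically be replaced by a learned ranking model, which is the capability the paragraph above describes.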

Related AI Tools

Kiro AI

Category: Dev & Coding

Kiro – Spec-Driven Agentic AI Coding Platform

Kiro is an agentic AI development platform that transforms natural prompts into structured…

Price starts from Free

AILandingPage

Category: Dev & Coding

AILandingPage – AI Landing Page Builder for Businesses

AILandingPage helps you create professional, conversion-focused landing pages using AI. Generate layouts,…

Price starts from Free

Angie by Elementor

Category: Dev & Coding

Angie – Agentic AI for WordPress

Angie is an agentic AI built for WordPress that completes real site tasks in…

Price starts from Freemium

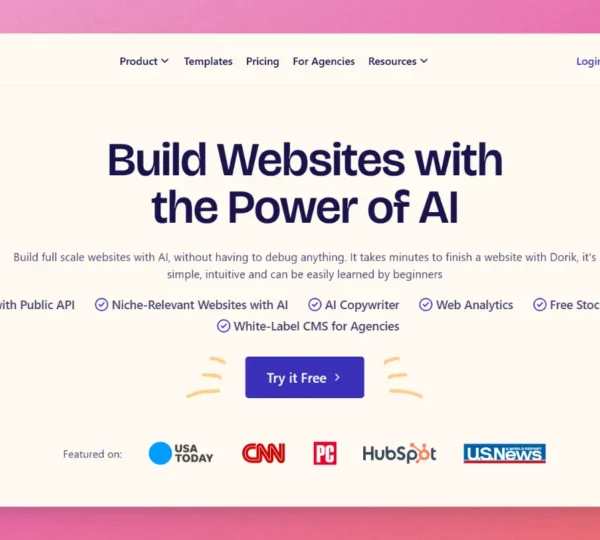

Dorik

Category: Dev & Coding

Dorik – AI Website Builder

Dorik is an AI-powered no-code website builder that helps anyone create full-scale, professional websites in…

Price starts from Freemium

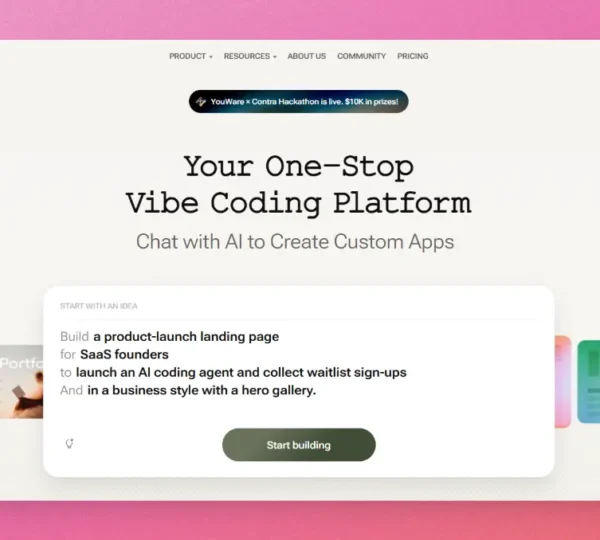

YouWare

Category: Dev & Coding

YouWare – Your One-Stop Vibe Coding Platform

YouWare is an all-in-one AI vibe coding platform that lets you create complete…

Price starts from Free

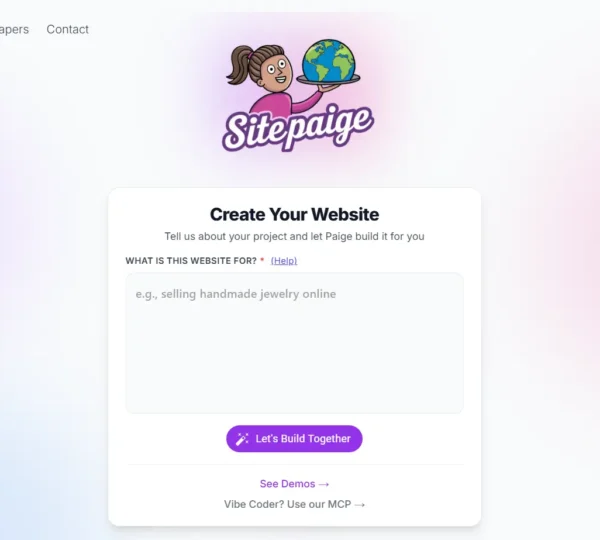

SitePaige

Category: Dev & Coding

SitePaige – Your Personal AI Web Developer

Meet SitePaige, the AI web developer that builds your entire website: frontend,…

Price starts from Freemium